Evaluating surgical skills from kinematic data using convolutional neural networks

This is the companion web page for our paper titled "Evaluating surgical skills from kinematic data using convolutional neural networks".

This paper has been accepted at the International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI) 2018.

The source code

The software is developed in Python 3.6. It takes the kinematic data as input and predicts the subject's skill level. We trained the model on an NVIDIA GTX 1080 GPU; a GPU only speeds up the computation and is not required. You will need the JIGSAWS dataset to re-run the experiments of the paper. The source code can be downloaded here.

The archive contains one Python file with the code needed to re-run the experiments. The hyper-parameters published in the paper are set in the source code. The empty folders in the archive are needed so that the code runs without a "folder not found" error. The content of JIGSAWS.zip (once downloaded) should be placed in the empty folder "JIGSAWS".
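The placement step above can also be scripted. The following is a minimal sketch, assuming the dataset archive is saved as JIGSAWS.zip next to the code and that its content should land directly inside the "JIGSAWS" folder. The function name `extract_dataset` is hypothetical and is not part of the released source code.

```python
import zipfile
from pathlib import Path


def extract_dataset(zip_path="JIGSAWS.zip", target_dir="JIGSAWS"):
    """Unpack the dataset archive into the (initially empty) target folder.

    Returns the sorted names of the top-level entries found in the folder.
    Both default paths are assumptions; adjust them to your local layout.
    """
    target = Path(target_dir)
    # Create the folder if it is missing, so later code does not fail on it.
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(str(zip_path)) as archive:
        archive.extractall(str(target))
    return sorted(entry.name for entry in target.iterdir())
```

Calling `extract_dataset()` from the folder that holds the code and the downloaded archive should leave the dataset inside "JIGSAWS". If the archive itself wraps everything in a top-level folder, the content may need to be moved up one level by hand.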

To run the code you will also need to download and install the following dependencies separately:

- Keras 2.1.2

- Tensorflow 1.4.1

- Numpy 1.13.3

- Scikit-learn 0.19.1

- Pandas 0.21.1

- Matplotlib 2.0.0

- Imageio 2.2.0

We used the versions listed above, but you should be able to run the code with more recent versions. Other versions of these packages can be found in the archives of the packages' corresponding websites.
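To compare a local installation against the versions listed above, a small check such as the following can help. It is a sketch only: the helper names `installed_version` and `version_report` are hypothetical, and the import names (for example `sklearn` for Scikit-learn) are the packages' usual module names rather than anything taken from the released code.

```python
import importlib

# Versions listed on this page, keyed by the usual importable module name.
LISTED_VERSIONS = {
    "keras": "2.1.2",
    "tensorflow": "1.4.1",
    "numpy": "1.13.3",
    "sklearn": "0.19.1",
    "pandas": "0.21.1",
    "matplotlib": "2.0.0",
    "imageio": "2.2.0",
}


def installed_version(module_name):
    """Return the module's __version__ string, or None if it cannot be imported."""
    try:
        module = importlib.import_module(module_name)
    except Exception:
        # Covers missing packages as well as packages that fail while loading.
        return None
    return getattr(module, "__version__", None)


def version_report(listed=LISTED_VERSIONS):
    """Map each module name to a (listed, installed) pair of version strings."""
    return {name: (wanted, installed_version(name)) for name, wanted in listed.items()}
```

Iterating over `version_report().items()` and printing each pair gives a quick view of which packages are missing or differ from the listed versions.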

Video illustrating the method

The video shows, side by side, the trial recording and the ongoing trajectory with heatmap coloring for the fourth trial of subject H (Novice) on the suturing task.

The video is encoded with the H.264 codec in an MP4 container: Suturing_H004.mp4

Visualizing the movements' contribution to the classification

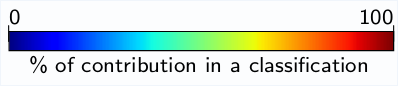

The proposed method uses the Class Activation Map to localize the regions of the surgical task and to show how much each region contributes to a given classification.

The functions that are used to visualize the trajectories are present in the source code.

Figure 2 in the paper illustrates the trajectory of the movements of subject H (Novice) for the left master manipulator.

The colors are obtained from the Class Activation Map values of a given class, which are then projected onto the (x, y, z) coordinates of the left master manipulator.
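The projection described above can be sketched with NumPy as follows. The sketch uses the standard Class Activation Map formulation, which assumes a global average pooling layer between the last convolution and the output layer: the map for a class is the weighted sum of the last convolution's feature maps, using that class's output weights. The function names, array shapes, and the min-max scaling are assumptions made for illustration; the visualization functions in the released source code may differ.

```python
import numpy as np


def class_activation_map(feature_maps, class_weights):
    """Class Activation Map over time for one class.

    feature_maps: array (T, K), output of the last convolution at T time steps.
    class_weights: array (K,), weights linking the K pooled filters to the class.
    Returns an array (T,) with one contribution value per time step.
    """
    feature_maps = np.asarray(feature_maps, dtype=float)
    class_weights = np.asarray(class_weights, dtype=float)
    return feature_maps.dot(class_weights)


def normalize_cam(cam):
    """Min-max scale CAM values to [0, 1]; a constant map becomes all zeros."""
    cam = np.asarray(cam, dtype=float)
    span = cam.max() - cam.min()
    if span == 0:
        return np.zeros_like(cam)
    return (cam - cam.min()) / span


def color_trajectory(xyz, feature_maps, class_weights):
    """Attach a normalized CAM value to every (x, y, z) point.

    xyz: array (T, 3). Returns an array (T, 4) with columns x, y, z, heat.
    """
    xyz = np.asarray(xyz, dtype=float)
    heat = normalize_cam(class_activation_map(feature_maps, class_weights))
    if xyz.shape[0] != heat.shape[0]:
        raise ValueError("trajectory and CAM must have the same number of time steps")
    return np.column_stack([xyz, heat])


# Toy data: 4 time steps and 2 filters, with values chosen only for illustration.
xyz = np.array([[0, 0, 0], [1, 0, 0], [1, 1, 0], [1, 1, 1]])
features = np.array([[1.0, 0.0], [2.0, 1.0], [0.0, 3.0], [1.0, 1.0]])
weights = np.array([1.0, -1.0])
colored = color_trajectory(xyz, features, weights)
# heat column -> [1.0, 1.0, 0.0, 0.75]
```

The fourth column of `colored` can then be passed as the color values of a 3D scatter plot, for example through the `c` and `cmap` arguments of Matplotlib's `scatter`, to obtain a heatmap-colored trajectory.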

You can find here the visualizations for all subjects and all their trials of the Suturing task, for each of the five cross-validation folds.

Last update : May 2018